Are You A Sucker for the Unexpected? 🤔 How to Deal with Black Swan Blindness

Wisdom Letter #81 | The One about The Black Swan

Hello and Welcome to The Wisdom Project. Your weekly dose of human-curated wisdom in a world full of algorithmic noise.

A Blurb on Nassim Nicholas Taleb’s book “The Black Swan” goes something like this👇

“A fascinating study of how we are regularly taken for suckers by the unexpected” (Larry Elliott, Guardian)

At some level, we are all suckers for the unexpected, including even Taleb.

Today, we look at how we can do less of that.

What is a Black Swan, why are we blind to it, and what can we do about it?

Read On.

What is A Black Swan?

A Black Swan is an event that is highly improbable, extremely impactful, and obvious in hindsight.

Let’s break it down.

There are 3 elements to a black swan:

Improbability

Extreme impact

And Obviousness when looking back.

Think of the extreme events that have changed the world over the last 2000 years. They all fall into this basket (and they are not necessarily negative).

They range from the founding of Christianity and Islam to the invention of the internet and cryptocurrencies.

And of course, the coronavirus pandemic.

The Improbability

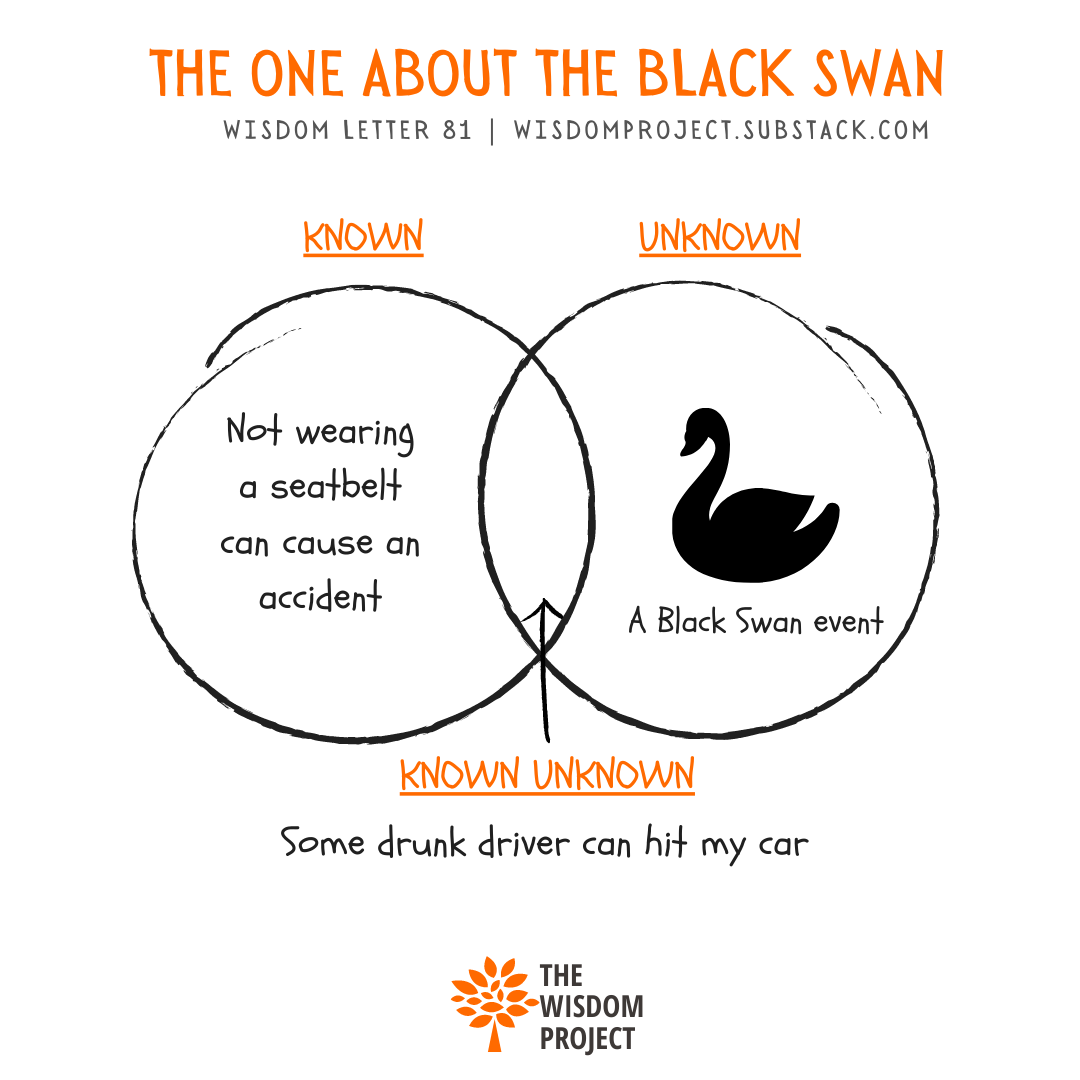

Black Swans lie in the realm of the ‘unknown unknowns’.

There are certain risks we know about, then there are some risks we know we don’t know about, and then there are some risks we don’t know that we don’t know about.

Black Swans belong to that last group. They are inherently unpredictable. We cannot predict them with our current understanding of the world.

This happens because of the way our brains work.

Our brains are pattern-matching machines.

They thrive on existing patterns they have stored in memory. And they use this stored information to predict future events.

Our brains aren’t any good at predicting events for which there are no stored patterns. This is also why we are so shaken up by them.

Think of how predictable the 9/11 attacks or the Covid pandemic seemed just 1 day or 1 week before they hit the world. Any “guru” would have said they were highly improbable.

We are limited by our knowledge of the world.

The worst-case scenario we can imagine will only expand upon the existing knowledge we have.

We can't imagine what we don't know about.

And that's where black swans come from.

If you had not heard of flu pandemics before, and how they worked, then they were 'unknown unknowns' for you.

The Impact

Black Swans have extreme impacts, both globally and in our personal lives.

There are many unknown unknowns in the world, but very few of them actually have an extreme impact.

Imagine how airport check-ins, or geopolitics, or American culture & society have changed since 9/11.

Or how mask use has changed since Covid-19.

At a personal level, things like the person you marry or the career you choose end up defining the course of your life. And think about all the variables that had to fit together for you to arrive at those junctures.

The Obviousness

This is the trickiest part.

Once a black swan event has occurred, it seems so obvious in hindsight.

Our brain updates its store of patterns with this information and falls into hindsight bias.

We connect the dots looking back and create a narrative leading up to a black swan.

And then we tell ourselves, this is kind of obvious.

Once you know how 9/11 happened, how the planes were hijacked and how they crashed into the Twin Towers, it seems so obvious that you almost feel it was always going to happen. Why did we not see it before? Why did we not have more security before?

Or take how the coronavirus spread last year and how the world reacted to it. In hindsight, it’s obvious that the virus was always coming, at least to the epidemiology community.

Heck, there’s a 2019 Netflix documentary with a line that goes something like this: “There are only 3 things that are inevitable in this world: death, taxes and flu pandemics.”

Bill Gates predicted a flu pandemic in a TED Talk 5 years ago.

And it’s like we all watched that Talk only in 2020 :P

Why Are We Blind to Black Swans?

The ‘obviousness’ is the tricky part here, because it gives us a false sense of predictability.

After every black swan event, we think that the event was actually predictable. We missed it this time, no issues, we will do better next time.

We tell ourselves that this was a miss. If only our models of prediction were better, we could have seen this coming.

But that is in fact false.

No model can work on the data it does not have. And this includes the models our brain uses for thinking.

In fact, this is data that the model "cannot" have beforehand.

The next black swan will not be a terrorist attack, it won’t be a flu pandemic, and it won’t be a financial meltdown either.

Then what will it be?

It can be anything.

Take a wild guess: a civil war in China, a software virus that spreads via WhatsApp messages and tweets, or some kind of fast-acting climate disaster.

The problem is, even my wildest guesses are driven by my current knowledge, the current patterns stored in my brain.

By definition, we are blind to future Black Swan events.

So What Can We Do?

We can prepare.

We can build redundancies & margins of safety.

Assume all our models of risk are limited. Including our own brain.

Our brain calculates risk based only on the information it has, the information it "knows" about.

We should leave room for "unknown unknowns" to come into our lives and change them forever.

At a global level

It’s hard to predict the next global black swan, and harder still to prepare for it as an individual. The least you can do is listen to the experts, the scientists who are raising alarms.

And give them a platform to talk about unforeseen risks.

They may sound like fear-mongers, but even if only 1 in 1000 of such ‘alarmists’ is right, their theories will end up changing the world.

At a personal level

Buy way more insurance than you think you need.

I think the one lesson we can all learn from the black swan effect is to prepare for the worst.

You know the worst thing that can happen to you is that you die. Or lose a limb in an unforeseen accident. The least you can do is get some insurance for those circumstances, so your loved ones get some support.

Don’t over-optimize.

Taleb has this great line where he says that if humans had designed the human body, we would have given it only one kidney, one hand, and one leg.

The proverbial MVP.

Mother nature is not blind to black swans.

It has redundancies built in and large margins of safety. The human body is a great example of that.

Mother nature is Anti-Fragile.

But that is for another day😅

One more thing we can do is not take obvious, avoidable risks.

Stuff like using seat belts, or helmets when on the road.

Or not indulging in tobacco, excessive alcohol, or drugs.

But now we are gradually entering the realm of knowable but ignored risks.

Those are a different beast altogether: the Grey Rhino. We will talk about that next week.

Signing Off, here’s a quote from Taleb’s Black Swan that we should all think about a lot more:

“If you expect a disaster, you prepare for it and that averts it. The real danger you have is from disasters you can't know — The unknown unknowns.”

Thank you for reading🙏

Recommendations

📖 Whenever I think of risk, I go back to this article from Morgan Housel. It’s not about finance, it’s about personal risk, and it’s very moving: The Three Sides of Risk

📺 This video is an interesting take on our understanding of risk — An enhanced understanding of risk.

📑 There’s a cool story around why Taleb chose the term “Black Swan” to describe these events, read it on Wikipedia.

🐦 As a fun little experiment, we got an AI copywriter to write an intro for this post. We gave it the topic and a short description, and within seconds it gave us 6 possible intros.

All 6 of them were good enough to have made it to this post.

Though we didn’t include them in the post, we did Tweet them out. The results are both fascinating and scary.

Maybe the next Black Swan will be Artificial General Intelligence.

What are You Reading?

Last week we got a few recommendations from our readers about what they are reading.

It was amazing, we loved that💗

So we are starting this new section where we will feature a few reader recommendations every week.

This is what we got last week:

The Power of Habit — Charles Duhigg

In Xanadu — William Dalrymple (twice)

City of Djinns — William Dalrymple

If you think what you are reading, watching, or listening to might interest other people as well, do let us know. Just hit reply, and let’s get talking.

You can choose to stay anonymous or we can link to your social profile if you want.

So What are you reading?

Related

Last year we wrote and curated a couple of posts around risk and uncertainty and the black swan effect:

Of Black Swans and Grey Rhinos | Wisdom Letter #28

Swans, Rhinos & The Elephant in the Room | Wisdom Letter #47

Important Note

If you are a new subscriber, make sure to drag this email into your primary inbox. Otherwise, you might miss future wisdom letters.

ICYMI 👉 Knowledge vs Wisdom | Wisdom Letter #80

This Week Last Year 👉 The Way We Work | Wisdom Letter #29

Hope you liked today’s post. If there are any improvements we can make, do let us know by replying to this.

We love listening to feedback.

This was Wisdom Letter #81. In case you want to revisit any of the previous 80 letters, check out our entire archive.

Love,

Aditi & Ayush